It is all in the prompt

Posted on 9th April 2024.

A prompt is the input text that you give an LLM. It can be a query about a subject, a summarization task, a creative task, a logical question, or just about any form of conversation that you may think to have with an intelligent and knowledgeable entity. In essence, LLMs are designed to communicate much like humans with text inputs (prompts) and text outputs (responses). Some LLMs also take in images and other files as inputs and may return output that is also more complex. For this blog post let us focus on text only and leave the discussion of multimodal settings for a different day.

Now just like the fact that inter-personal communication is a skill that may require practice, thought and care; communication between a human and an LLM also needs some care. This is the area of prompt engineering which is all about finding the best ways to command or query your LLM to do what you want, in a as consistent and reliable a manner as possible.

When you aim to customize an LLM for your needs, devising the right prompts is one of the first tools at your disposal. In this post we outline some of the key prompting guidelines for the major LLMs. Some of these guidelines are useful for a user while others are useful for developers that tweak and adjust private business LLMs or other LLM-based products.

"The hottest new programming language is English"

LLM and deep learning expert Andrej Karpathy has a pinned influential tweet saying "the hottest new programming language is English". With this, he clearly means that you can now use plain English (or sometimes other human languages) as a form of a programming language.

No, this is not the death of computer programming languages. However it is the birth of a paradigm of computing where you can ask LLMs to carry out quite formal tasks. For example consider this input prompt:

Here is a list of countries: {Canada, Finland, China, Israel, Vietnam, Australia, Netherlands}. For these countries, give me a list of the form {(Country, Capital), ...} where for each of the country, you provide the capital. Just provide the answer and not any supporting text.

When we tried this prompt on the commercial models GPT-4, GPT-3.5, Gemini Pro and laude-3 Sonnet, the answer was exactly as expected on all of them:

{(Canada, Ottawa), (Finland, Helsinki), (China, Beijing), (Israel, Jerusalem), (Vietnam, Hanoi), (Australia, Canberra), (Netherlands, Amsterdam)}

This is exactly what a programmer with access to a database of countries and their capitals would produce given this information. Except in this case, the information was stored in the LLM, and we only had to write instruction in English to instruct it on what to do. In addition to the commercial (large) models, the same exact output also resulted when we used the open source Mixtral model on our Accumulation Point GPU-accelerated server.

Now when we used smaller open sourced models on a laptop we got some slightly different responses. For example with Mistral 7B we got:

(Canada, Ottawa), (Finland, Helsinki), (China, Beijing), (Israel, Jerusalem), (Vietnam, Hanoi), (Australia, Canberra), (Netherlands, Amsterdam)

While this is semantically correct the { and } brackets were missing. The when we used Llama2 7B we got:

Sure! Here is the list of countries and their capitals:

{Canada, Ottawa}

{Finland, Helsinki}

{China, Beijing}

{Israel, Jerusalem}

{Vietnam, Hanoi}

{Australia, Canberra}

{Netherlands, Amsterdam}

This response, while again being semantically correct, is not exactly what we asked for, because it did not follow the prompt and omit all supporting text. Even running the command a few more times did not yield the right output. (In general, LLMs are injected with some randomness through a mechanism that is outside the scope of this blog post. This means that performing the same query several times does not in general return the same output. The level of randomness can sometimes be adjusted by adjusting the temperature parameter).

So as we see: yes, English is showing to be some form of programming language, but it is not as controllable and deterministic as other paradigms of programming computers, especially when the task at hand gets a bit more complicated.

Now say we have to use Llama2 7B, because that is the compute that we have, or due to some other constraint. A challenge is then: is there a better prompt that we can give it so that it will do exactly what we want?

It turns out that for our example with Llama2 7B, we can achieve the desired output via this slightly more complicated prompt:

I want a short answer without any additional text, exactly in the form:

{(country1, capital1), (country2, capital2), ...}

For example for the countries Lebanon and France, your response would be:

{(Lebanon, Beirut), (France, Paris)}

without any other text.

Now give me the response for the countries: Canada, Finland, China, Israel, Vietnam, Australia, Netherlands.

It yields the desired output on Llama2 7B, just like with the bigger models. We arrived at this prompt via trial and error.

Prompting guides for the big commercial LLMs

The lesson from the simple example above is that by careful prompting or prompt engineering, we may be able to produce output that is more reliable and more desirable for our application, but this requires some thought, care, and often some trial and error. It also shows that in general, different LLMs respond differently to different prompts. A good reference for this are the prompting guides from the three big commercial LLMs by OpenAI, Google, and Anthropic:

We recommend that you browse each of these pages to get a feel for a better understanding of the nuances of prompting for these LLMs.

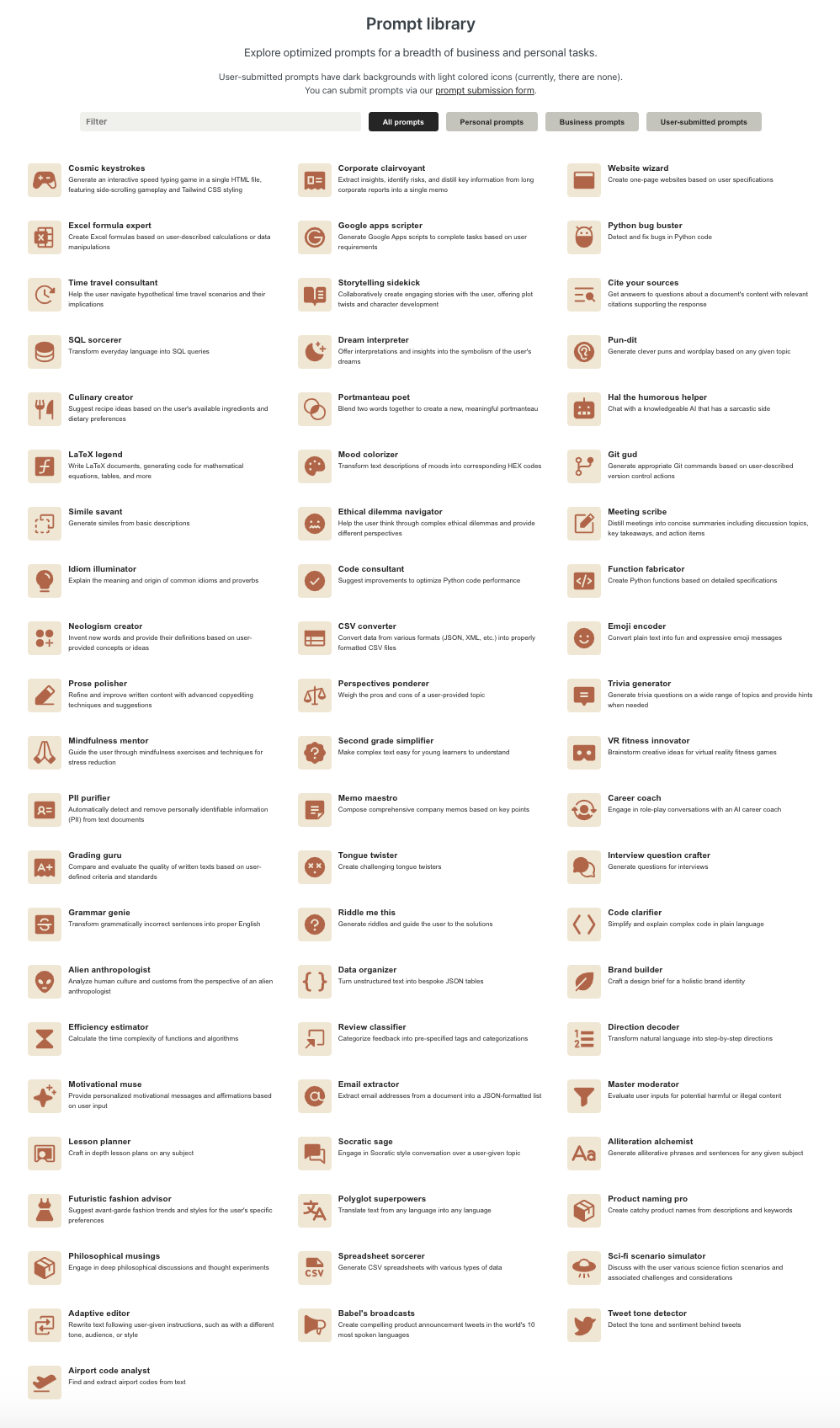

The LLMs of each vendor were instruction tuned in their own unique way. This means that in principle the guide for each vendor is suited for that particular LLM. Nevertheless, prompting ideas from each of these LLMs can be generally be applied across platforms, and also on open sourced LLMs. For example as part of the Antropic docs, you will find their lovely prompt library. The general prompts in this library can be used across LLMs.

These prompting guides actually contain quite a bit of information.

User prompts, Assistant Prompts, and System prompts

When you follow a prompting guide you will quickly notice that some prompts are user prompts, some are system prompts, and some are assistant prompts. This is evident via the role in the OpenAI and Anthropic documentation (Google has different terms and seems to still be evolving).

The user is simply the person (or person-like system) engaging with the chat. The assistant is the LLM responding. The system is an overarching prompt for the LLM instructing it how to behave. The simplest prompting sequence has simply a single user prompt and receives an assistant response. But in more complex prompts, which can also result from typical back-and-fourth chat conversation, the prompt can involve a sequence of user and assistant messages. That is, you show the LLM how it should have responded as assistant.

Most prompting strategies include the system message which is the one time system prompt the LLM gets. It puts the LLM in the "right head space" for answering the question like you want. For example look at this example that we created in OpenAI's Playground, suited for a create task of suggesting product names based on a product description:

As you can see, you set the System prompt once on the left hand side, and then engage in chat going back and fourth between user prompt and LLM responses (assistant prompts). Note that every invocation of a new user prompt puts the system prompt and the whole history of user prompts and assistant prompts as input to the LLM. Specifically, if you feel comfortable with LLM APIs as described here, this would be the API call associated with the example above.

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=[

{

"role": "system",

"content": "You are a system which suggests product names for engineering products. For every description of an engineering product that the user presents you give a numbered list of 5 short names that may suit this product "

},

{

"role": "user",

"content": "A device for monitoring motor temperatures of excavators. "

},

{

"role": "assistant",

"content": "1. HeatGuard Pro\n2. ExcavaTemp Watch\n3. MotorTherm Pro\n4. Digisense TempTrack\n5. ExcavHeat Monitor"

},

{

"role": "user",

"content": "A robotic level device which moves around new constructions and checks that surfaces are level."

},

{

"role": "assistant",

"content": "1. RoboLevelInspect\n2. ConstructoScan\n3. LevelSentry Bot\n4. SurfaLevel Rover\n5. BuildCheck Pro"

}

]

)

We'll also mention that when you run open LLMs you can also set system prompts. See the documentation of the popular model runners Ollama or LM Studio to see how this is done.

Where to from here?

LLM prompts and prompting techniques will surely continue to evolve as LLMs evolve. Yet, the eco-systems are already mature enough to be able to tweak prompts for your desired needs. When wrapping LLMs inside your private business LLMs your taylor made software may also massage prompts for specific purposes.

© 2018 – 2025 Accumulation Point Pty Ltd. All rights reserved.