Commercial LLMs and their APIs

Posted on 19th March 2024.

Find supporting Python code demonstrating each of these APIs at the llm-hello-world GitHub repository.

In this blog post we briefly visit the APIs of the currently most popular commercially available LLMs (post updated March 19th). Specifically we review the APIs of OpenAI, Google (Gemini), Anthropic (Claude), Cohere, Mistral, and Perplexity. We outline the basics of each of these APIs.

Here are links to the relevant API documentation pages:

- OpenAI API overview

- Gemini docs and API reference in Google AI for developers

- Anthropic Claude API reference

- Cohere's API reference

- Mistral AI API

- Perplexity's pplx-api

Why are we focusing on closed commercial products in this post?

Our focus with these blog posts is to support industry in applying LLMs in their businesses. Because of security and privacy concerns, we believe that running non-commercial engines on premises is often the safest choice. With such solutions you do not have to send your private data out of your physical or virtual infrastructure. Hence using commercial APIs such as those that we cover here potentially bears some risk.

Nevertheless, all of these commercial vendors provide privacy agreements. Further, our motivation for first covering the APIs of commercial products is that these APIs provide functionality that leads the industry and often motivates the open-source products that follow. Hence getting a bird's-eye view of what these APIs provide is useful.

The main functionality to expect

What do we expect from an LLM API? In a nutshell, it is a mechanism for your applications and programs to use the computation power of the LLM provider with their specific model, running on their servers. Someone from your team or an external consultancy will develop your LLM business application for you, and your application will use the API to query the LLM itself.

The main types of activities and interfaces that the API provides are:

- Basic messages/chat - This is the main functionality where we send prompts and get responses. In most cases the prompts and responses are textual, yet for certain vendors they can also include images or other modalities.

- Function calling - This is a case where we enable the LLM to interface with external functionality such as a calculator or a web search. Your application can define custom functions and the API will give your application the "instructions" to use the functions as the need arises. When orchestrated well, this can result in very powerful functionality where your LLM application can rely on other computation which is external to the LLM. LLMs are not built to execute code, do arithmetic, or perform other algorithmic or data retrieval tasks; plugging in an external function for this gives the LLM much more power to respond to queries.

- Embeddings - Here we send text and get an embedding vector in response. This is often useful as a building block for retrieval, semantic search, and RAG applications.
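To make the function calling idea concrete, here is a minimal sketch of the application side. It assumes the API's "instructions" arrive as a function name plus JSON-encoded arguments; the dictionary shape, the `add_numbers` function, and the `dispatch_function_call` helper are hypothetical illustrations, not any vendor's exact schema.

```python
import json


def add_numbers(a, b):
    """A toy 'calculator' function that the application exposes to the model."""
    return a + b


# Registry of functions the application is willing to run on the model's behalf.
AVAILABLE_FUNCTIONS = {"add_numbers": add_numbers}


def dispatch_function_call(call):
    """Run the function named in `call` with its JSON-encoded arguments."""
    name = call["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Model requested an unknown function: {name}")
    arguments = json.loads(call["arguments"])
    return AVAILABLE_FUNCTIONS[name](**arguments)


# Hypothetical instruction, shaped as the application might receive it (assumed format).
example_call = {"name": "add_numbers", "arguments": '{"a": 2, "b": 40}'}
result = dispatch_function_call(example_call)
```

In a real application the result would typically be sent back to the LLM in a follow-up message so that it can compose the final answer. Keeping an explicit registry means the model can only trigger functions you have deliberately exposed.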

Note that in addition to the full-featured commercial LLM APIs that we cover, there are also vendors whose APIs specialize almost exclusively in embeddings. One choice which seems to have gained popularity is Nomic Embed.
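Whichever vendor supplies the vectors, the retrieval step that uses them tends to look similar. The sketch below ranks documents by cosine similarity to a query. The three-dimensional vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions), and the function names are our own.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_documents(query_vector, document_vectors):
    """Return document names sorted from most to least similar to the query."""
    return sorted(
        document_vectors,
        key=lambda name: cosine_similarity(query_vector, document_vectors[name]),
        reverse=True,
    )


# Toy vectors standing in for real embeddings (values are made up).
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "office hours": [0.1, 0.9, 0.1],
    "shipping times": [0.5, 0.2, 0.7],
}
query = [0.8, 0.2, 0.1]
ranking = rank_documents(query, documents)
```

In a RAG application, the top-ranked documents would then be pasted into the prompt sent to the LLM.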

Beyond messages, function calling, and embeddings you can expect APIs to provide other functionality. However, messages, function calling, and embeddings are the smallest core common denominator, so we will discuss these three below.

In almost all cases, using the API is based on an API key. You get this secret key from the vendor and then your business application uses it with your API calls. This API key allows the LLM vendor to count your API calls and bill you appropriately.

Similarly, most communication with the API is via HTTPS requests which are either carried out directly or are performed through a programming language. For example the following is taken from OpenAI's documentation and in this case uses the cURL command to send HTTPS requests.

    curl https://api.openai.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -d '{
        "model": "gpt-3.5-turbo",
        "messages": [
          {
            "role": "system",
            "content": "You are a poetic assistant, skilled in explaining complex programming concepts with creative flair."
          },
          {
            "role": "user",
            "content": "Compose a poem that explains the concept of recursion in programming."
          }
        ]
      }'

You don't have to follow every detail of this command. It sends an HTTPS request to https://api.openai.com/v1/chat/completions, authenticating with the API key stored in the environment variable OPENAI_API_KEY. After a system message, the call asks the GPT-3.5 Turbo LLM the following: "Compose a poem that explains the concept of recursion in programming." When you issue this request, OpenAI's servers compute the result of your query and return the answer in a response. When your LLM business application is developed, the developers will create API calls like this, either directly via HTTPS or using libraries in Python (or other languages) that wrap such calls. For further code examples, see our Python examples in the llm-hello-world GitHub repository.
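As a rough illustration of what "directly via HTTPS" can look like from Python, here is a sketch of the same request using only the standard library. The function names are our own, and the code assumes the response carries the reply under `choices[0].message.content`, as in OpenAI's documented chat completions response. Vendor libraries wrap these steps for you.

```python
import json
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, user_prompt, system_prompt, model="gpt-3.5-turbo"):
    """Return (headers, payload) mirroring the cURL example."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return headers, payload


def send_chat_request(headers, payload, url=OPENAI_CHAT_URL):
    """POST the payload and return the reply text (needs network access and a valid key)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The reply text sits in the first choice of the response.
    return body["choices"][0]["message"]["content"]
```

To try it, read the key with `os.environ["OPENAI_API_KEY"]`, pass it to `build_chat_request` together with the two prompts, and hand the result to `send_chat_request`. Separating the building step from the sending step makes it easy to inspect or log exactly what leaves your infrastructure.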

For each vendor we now provide links to key functionality and summarize a few notes. We link to the page for getting an API key, to the message passing API, to the function calling API (when available), to the embeddings API (when available), and to a pricing page (where relevant). We also outline what programming languages are supported via a library in addition to the basic cURL interface. Note that even in cases where your desired programming language is not supported by the API, you can always use the cURL interface programmatically. Finally we list other API capabilities and features.

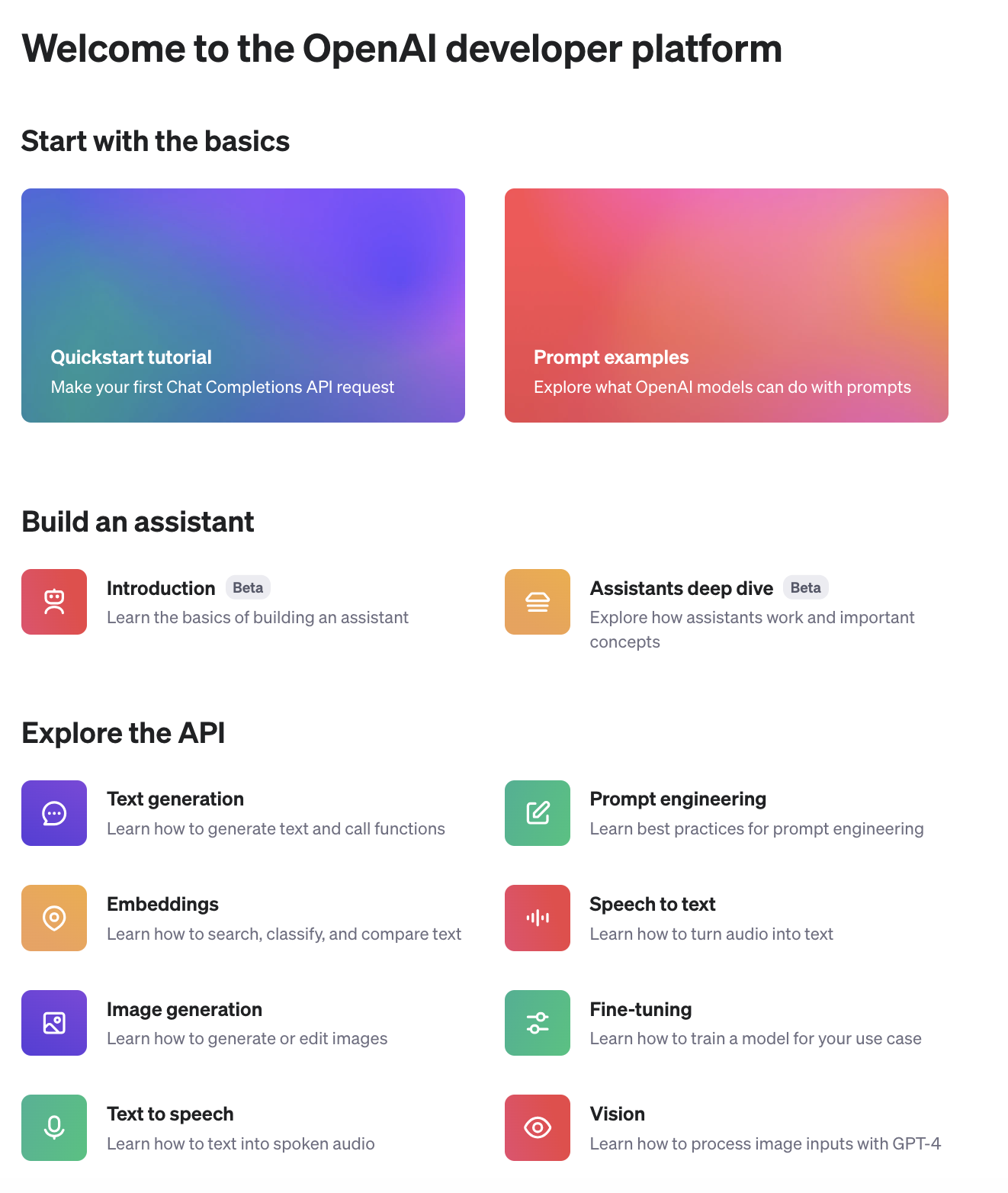

OpenAI

OpenAI is the most mature commercial vendor of LLMs and has led the way with its ChatGPT products. Its GPT-4 led most leaderboards until recently.

- Create an account

- Get API key

- Message passing: This is called the chat completions API. There is also a JSON mode which explicitly forces the API to return responses in JSON. A legacy variant of this API is called the completions API.

- Function calling: This falls under the tools category of OpenAI's assistants API. In particular, two tools that are built into OpenAI are the code interpreter and knowledge retrieval.

- Embeddings: Embedding models are text-embedding-3-small, the more expensive text-embedding-3-large, as well as text-embedding-ada-002.

- Pricing: The pricing page has clear details on pricing for messages, assistant API tools, embeddings, and more.

- Programming languages supported: Python and Node.js (JavaScript).

- Other API capabilities, features, and notes: Fine-tuning, vision, image-generation, text-to-speech, and more.
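As an illustration of the embeddings item above, the following sketch builds a request for OpenAI's embeddings endpoint and extracts the vector from the response. It assumes the endpoint https://api.openai.com/v1/embeddings and a response that carries the vector under `data[0].embedding`. The helper names are our own, and the details should be checked against the current API reference.

```python
import json
import urllib.request

OPENAI_EMBEDDINGS_URL = "https://api.openai.com/v1/embeddings"


def build_embedding_payload(text, model="text-embedding-3-small"):
    """Request body: the model name plus the text to embed."""
    return {"model": model, "input": text}


def extract_embedding(response_body):
    """Pull the vector out of a parsed embeddings response."""
    return response_body["data"][0]["embedding"]


def fetch_embedding(api_key, text):
    """Call the API and return the vector (needs network access and a valid key)."""
    request = urllib.request.Request(
        OPENAI_EMBEDDINGS_URL,
        data=json.dumps(build_embedding_payload(text)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return extract_embedding(json.load(response))
```

Switching to text-embedding-3-large only requires changing the `model` argument of `build_embedding_payload`.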

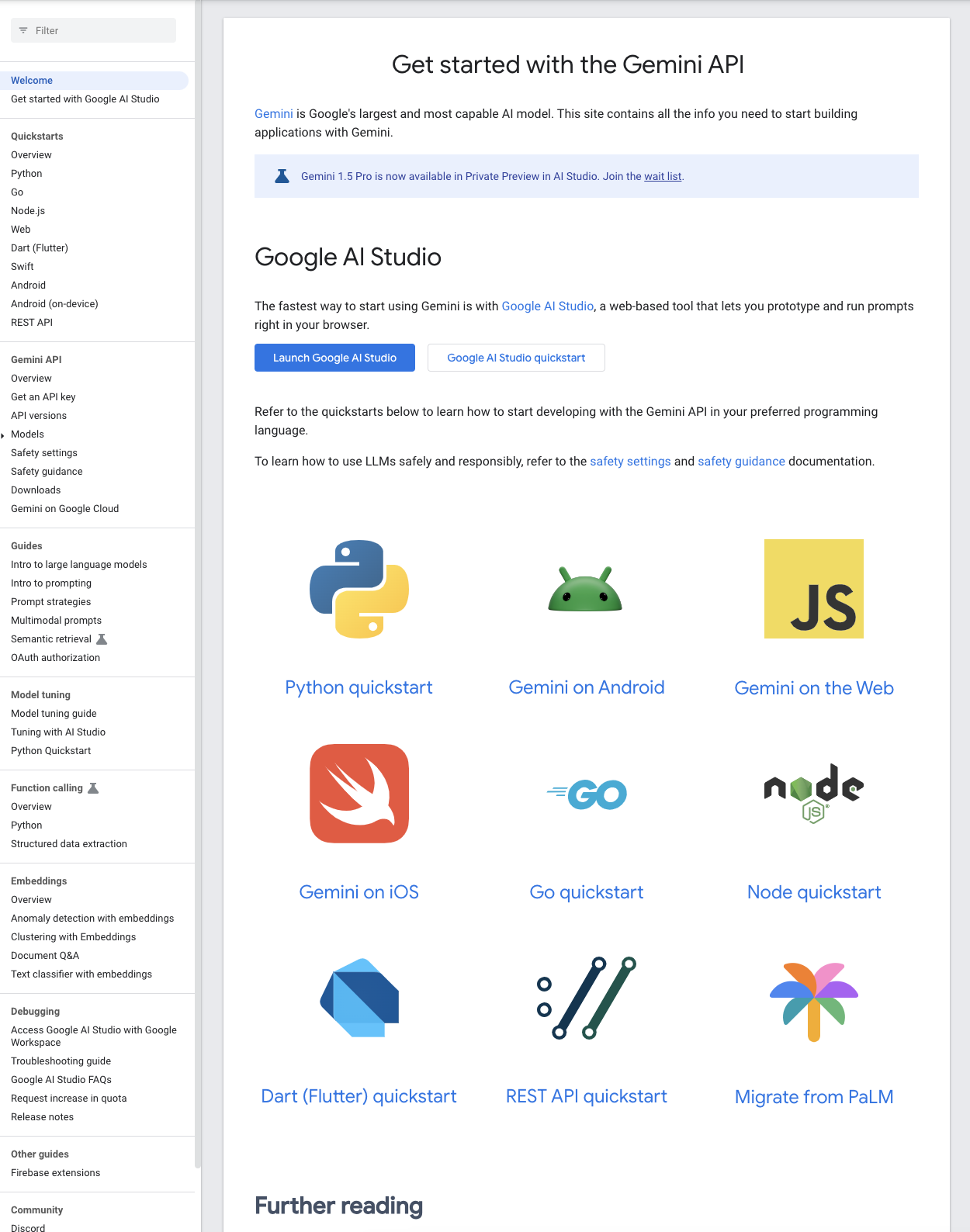

Google Gemini

Google is a well-oiled software and innovation machine with plenty of APIs for other products, and their Gemini API fits right in. Their recent release of Gemini 1.5 boasts a massive context window, and their API builds on the previous PaLM API (PaLM is Google's older language model).

- Get API key

- Message passing

- Function calling

- Embeddings

- Pricing

- Programming languages supported: Python, Go, Node.js (JavaScript), and mobile device app languages.

Anthropic Claude

Anthropic presents very clear API documentation with a slick look for their API reference. Their recent release of Claude 3, which seems to beat GPT-4 on certain benchmarks, also places them as a competitive choice. Claude 3 provides three models of increasing complexity and cost: Haiku, Sonnet, and Opus.

- Get API key

- Message passing

- Function calling: Still under development.

- Embeddings

- Pricing

- Programming languages supported: Python and JavaScript.
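The request shape differs slightly from the OpenAI cURL example earlier in this post. The sketch below reflects our reading of Anthropic's messages API at the time of writing: the key goes in an `x-api-key` header, an `anthropic-version` header is sent, `max_tokens` is required, and a system prompt is a top-level field rather than a message. The function name and default values are our own choices, so check the API reference before relying on them.

```python
ANTHROPIC_MESSAGES_URL = "https://api.anthropic.com/v1/messages"


def build_claude_request(
    api_key,
    user_prompt,
    system_prompt=None,
    model="claude-3-haiku-20240307",
    max_tokens=256,
):
    """Return (headers, payload) for a single-turn message to Claude."""
    headers = {
        "x-api-key": api_key,  # not an "Authorization: Bearer ..." header
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    payload = {
        "model": model,
        "max_tokens": max_tokens,  # upper bound on the length of the reply
        "messages": [{"role": "user", "content": user_prompt}],
    }
    if system_prompt is not None:
        # The system prompt is a top-level field, not an entry in "messages".
        payload["system"] = system_prompt
    return headers, payload
```

The headers and payload can then be sent as a JSON POST to `ANTHROPIC_MESSAGES_URL` with any HTTP client, or you can use Anthropic's Python library, which handles these details for you.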

Cohere

Cohere is not a standard LLM vendor as it also provides other NLP services and their LLM is aimed at supporting RAG applications and others. This is reflected in their API documentation.

- Get API key

- Message passing

- Function calling

- Embeddings

- Pricing

- Programming languages supported: Python and Node.js (Javascript).

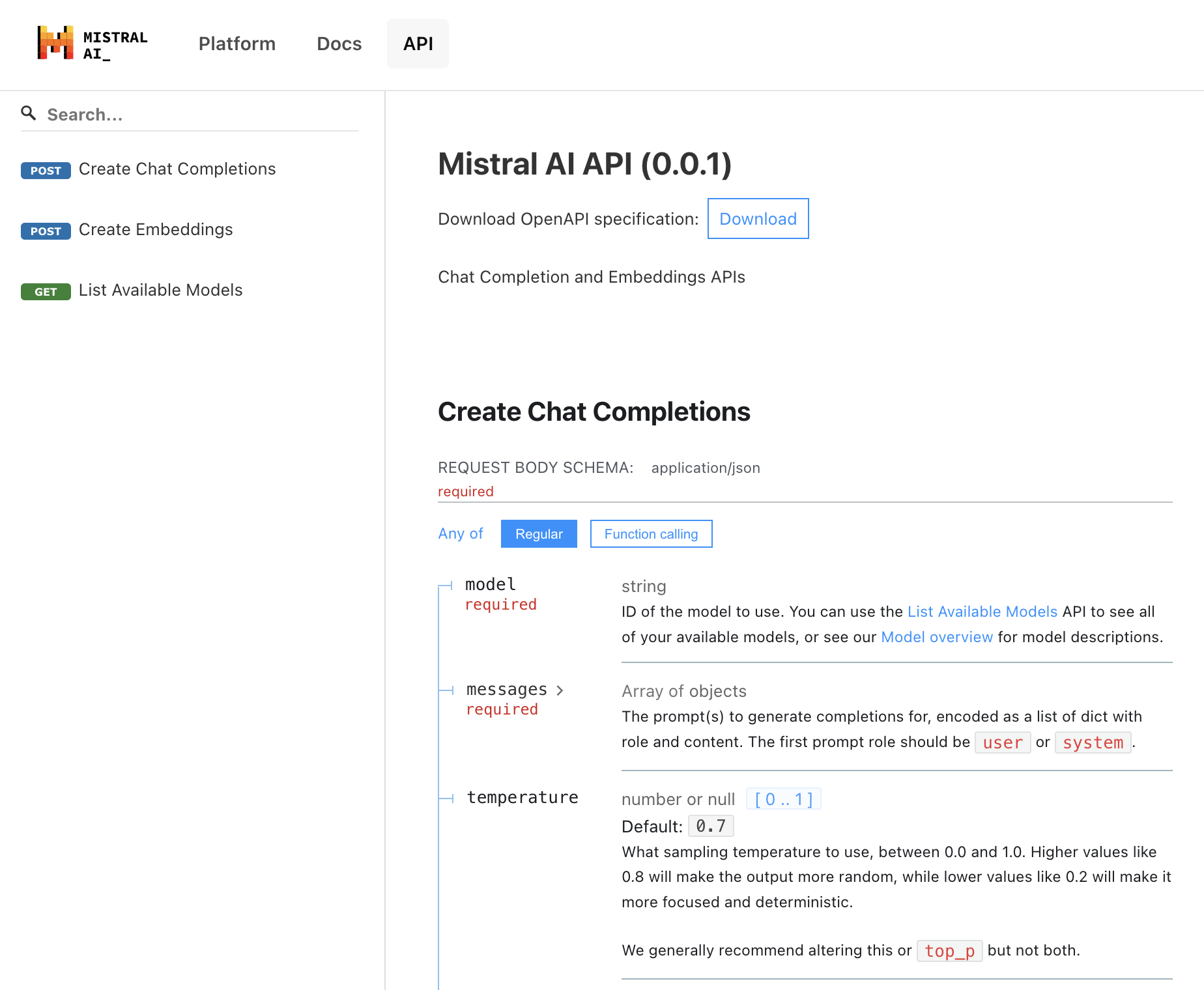

Mistral

Mistral is known for excellent open LLMs, and it recently released a closed model, Mistral Large. While the open models from Mistral AI (which are not accessed through an API) have been very successful, the API reference docs for the commercial models are still not mature at the time of this writing.

Mistral does not have free API access; you will need to input your credit card details.

- Get API key: First you need to register with Mistral, then you can create an API key.

- Message passing

- Function calling: Not available yet.

- Embeddings

- Pricing

- Programming languages supported: Python and JavaScript.

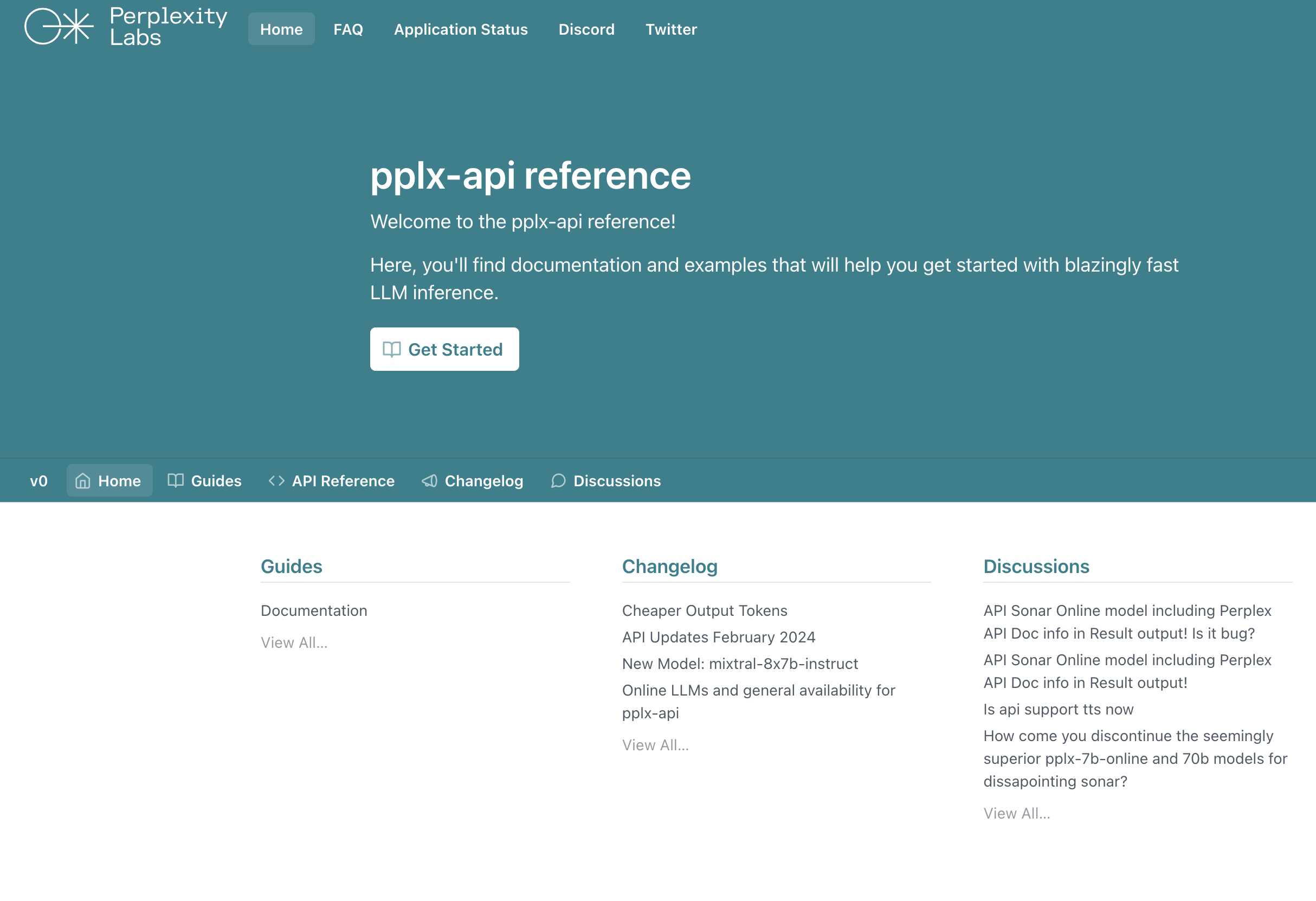

Perplexity

Finally, the Perplexity model also has an API. Perplexity is more of a user facing product, yet their API appears mature and well documented.

Perplexity does not have free API access; you will need to input your credit card details.

- Get API key: First you need to register, then you can create an API key.

- Message passing

- Function calling: Not available.

- Embeddings: Not available.

- Pricing

- Programming languages supported: Python, Node.js (JavaScript), Ruby, and PHP.

Other commercial LLMs

Another promising commercial LLM is Inflection, which at the time of this writing has not yet exposed an API, although they say one is planned.

We also mention Clarifai which provides a mature API service for multiple ML/AI tasks including LLMs (wrapping other commercial LLM APIs). There is also Groq's API which should be of interest. Groq is a specialized hardware platform for LLMs that is very fast and efficient. At the moment, Groq is not tied to any specific commercial LLM and instead their API allows you to use several open models on their hardware.

Where to from here?

The LLM arena will continue to evolve quickly in 2024, yet APIs are beginning to stabilize. Both commercial LLMs and open LLMs (not covered in this post) are already mature and ready for integration within business applications.

If you are comfortable with basic programming environments, you can get a feel for APIs by making API calls on your own (see our examples).

What is this good for? The next step would be to consider how you would integrate such commercial LLMs in your business applications. See also our blog post about where to start to help you further consider your steps.

© 2018 – 2025 Accumulation Point Pty Ltd. All rights reserved.